Discover how the Full-Expectation-Yet (FEY) Approach revolutionizes software testing by focusing on outputs and behavior validation. By ensuring the presence of expected values, this approach enhances test coverage, reliability, and overall software quality. Dive into the key insights, challenges, and benefits of implementing the FEY Approach to unlock the true potential of your testing efforts.

In software testing, a common challenge is failing to define expected values for every input during test creation. This leads to incomplete or ineffective test coverage, so issues can slip through undetected. In this article, we explore the underlying causes of this problem and introduce a solution that addresses them.

Typical Problems in Testing:

Specification Gaps: Not every edge case or scenario is explicitly described in the software specification, so such cases are easily overlooked during test creation.

Loss of Test Validity: As software changes, tests may become outdated and lose their relevance, and visual inspection alone makes it difficult to determine whether they are still accurate.

Complexity of Software Interfaces: Software systems often have numerous interfaces, making it challenging to define clear expected values for each input at every point in time.

Overwhelming Automation Projects: In large-scale automation projects, testers may overlook situations or test vectors for which no expected values have been defined, resulting in incomplete test coverage.

A Solution – Adding an Additional Monitoring Layer

To address these challenges, we propose implementing an additional monitoring layer that ensures the presence of expected values for each interface.

This is achieved by creating a dedicated check variable for each interface, initialized with the default value “false” and set to “true” only where an expected value is defined. The variable is explicitly and automatically highlighted in the test report: if no expected value is defined at any point in a test, the test fails automatically. After each test run, testers can therefore quickly identify the scenarios, inputs, or situations that still lack expected values.

The approach of focusing on outputs in testing is particularly suitable for:

Safety-Critical Industries: Industries such as automotive, medical, aerospace, and other safety-critical domains where the correct behavior and accuracy of software outputs are of utmost importance.

Development Teams with Complex Software: Teams working on software projects with intricate functionalities, numerous interfaces, and complex calculations that require thorough testing and validation of outputs.

Test Managers and Engineers: Professionals responsible for ensuring the quality and reliability of software through effective testing strategies. This approach provides them with a systematic method to monitor and verify the expected outputs.

Quality Assurance Teams: QA teams seeking to enhance their testing processes by incorporating a comprehensive approach that covers both inputs and outputs, thereby improving the overall test coverage and effectiveness.

Test Automation Experts: Experts in test automation who aim to leverage automation tools and techniques to streamline and optimize the testing process, with a specific focus on outputs and behavior validation.

Areas of applying the approach

The evaluation of a software's outputs and calculations forms the core of testing. Whether a test case passes or fails depends solely on its expected values, because they define the expected behavior of the software. Hence, it is crucial to describe these expected values as comprehensively as possible.

To illustrate this, here is a short side note:

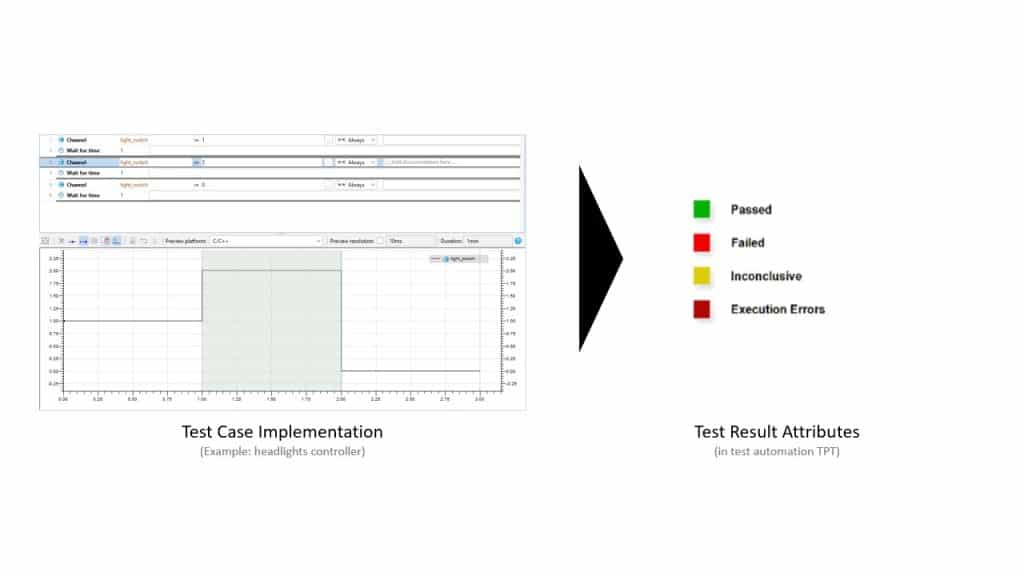

Basic principles of test automation:

- A test case must receive at least one assessment to be evaluated as successful or failed. If no assessment is available, the test case is declared as Inconclusive in TPT.

- A test case is considered successful if all assessments are passed.

- A test case is considered failed if at least one assessment fails.

- If a test case cannot be executed, it is marked as an execution error.
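The verdict rules above can be sketched as a small aggregation function. This is a minimal Python illustration, not TPT's implementation; the names `Verdict` and `aggregate_verdict` are hypothetical, and each assessment result is assumed to be reduced to a single boolean (True = passed).

```python
from enum import Enum
from typing import Sequence


class Verdict(Enum):
    SUCCESS = "success"
    FAILED = "failed"
    INCONCLUSIVE = "inconclusive"
    EXECUTION_ERROR = "execution error"


def aggregate_verdict(assessment_results: Sequence[bool], executed: bool = True) -> Verdict:
    """Aggregate individual assessment results (True = passed) into one test case verdict."""
    if not executed:
        # The test case could not be run at all.
        return Verdict.EXECUTION_ERROR
    if not assessment_results:
        # No assessment means there is no basis for passing or failing.
        return Verdict.INCONCLUSIVE
    if all(assessment_results):
        # Every assessment passed.
        return Verdict.SUCCESS
    # At least one assessment failed.
    return Verdict.FAILED


print(aggregate_verdict([True]))  # Verdict.SUCCESS
print(aggregate_verdict([]))      # Verdict.INCONCLUSIVE
```

Note that `aggregate_verdict([True])` returns `Verdict.SUCCESS` regardless of how many steps the test case stimulates, which is the weakness described in the example that follows.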

This reasonable logic has a disadvantage, as a short example shows.

A test case is created for a test object with many outputs (and therefore many functional calculations). The test case contains many test conditions (steps) and stimulates the test object in many situations (high coverage). Now the problem: the test case contains only a few assessments of little relevance to the behavior. It is therefore reported as successful, even though it has little content or significance.

▶️ This is very unfavorable. But there is a solution for this. We call this approach Full-Expectation-Yet.

The Full-Expectation-Yet (FEY) Approach in a nutshell:

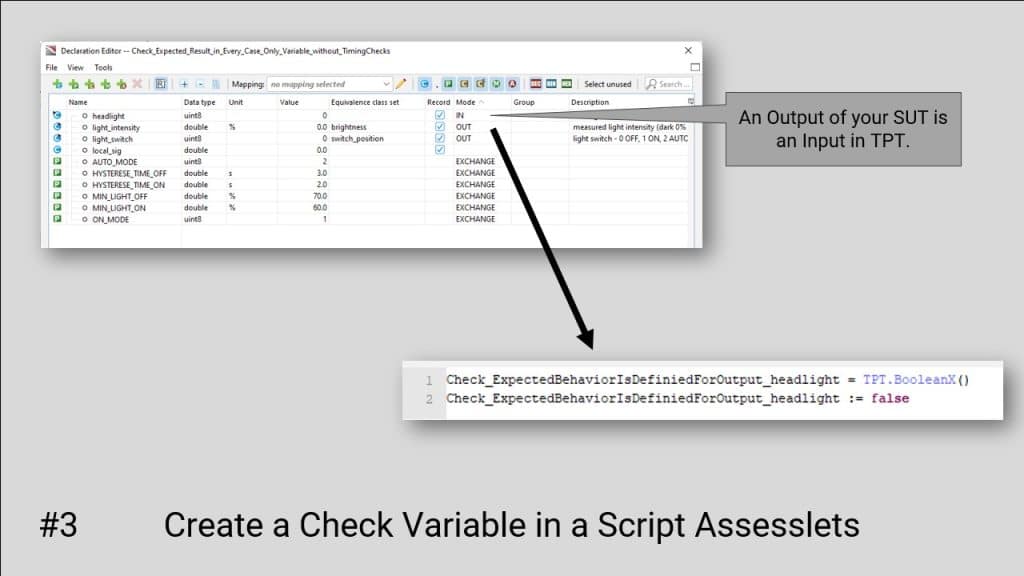

A check variable is created for each output of the system under test. Its purpose is to check whether an expected value exists for that output at every point in time.

For each sample (test vector with input data), the check variable therefore defaults to false. It is set to true only if an expected value is specified for the output in that sample.
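A check variable can be pictured as one boolean per sample. The sketch below assumes, hypothetically, that the samples in which an output has an expected value are known as half-open index ranges (for example, the steps in which an assessment is active); `build_check_variable` is an illustrative name, not a TPT function.

```python
from typing import Iterable, List, Tuple


def build_check_variable(num_samples: int,
                         assessed_windows: Iterable[Tuple[int, int]]) -> List[bool]:
    """Build the FEY check variable for one output.

    assessed_windows: half-open sample ranges [start, end) in which an expected
    value for the output is specified (hypothetical representation).
    """
    check = [False] * num_samples  # default value: no expected value yet
    for start, end in assessed_windows:
        # Clamp the window to the recorded samples.
        for i in range(max(start, 0), min(end, num_samples)):
            check[i] = True  # an expected value exists for this sample
    return check


check = build_check_variable(10, [(0, 4), (6, 10)])
print(check)
# [True, True, True, True, False, False, True, True, True, True]
```

Samples 4 and 5 keep the default value, so this output would make the test case fail until an expected value is added for them.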

FYI: In TPT, assessments can be defined in custom entities that are independent of the test data. Assessments run automatically after test execution.

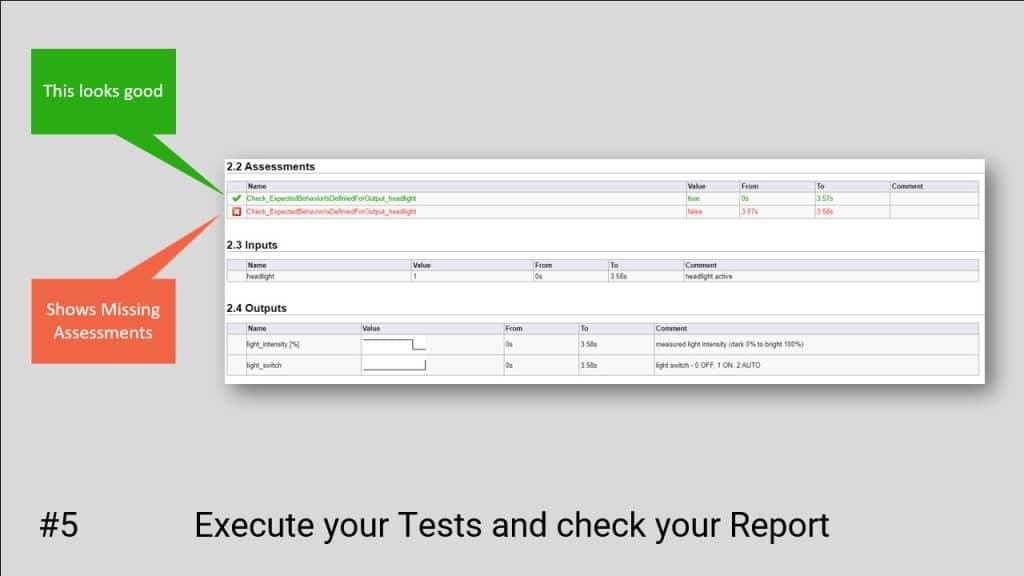

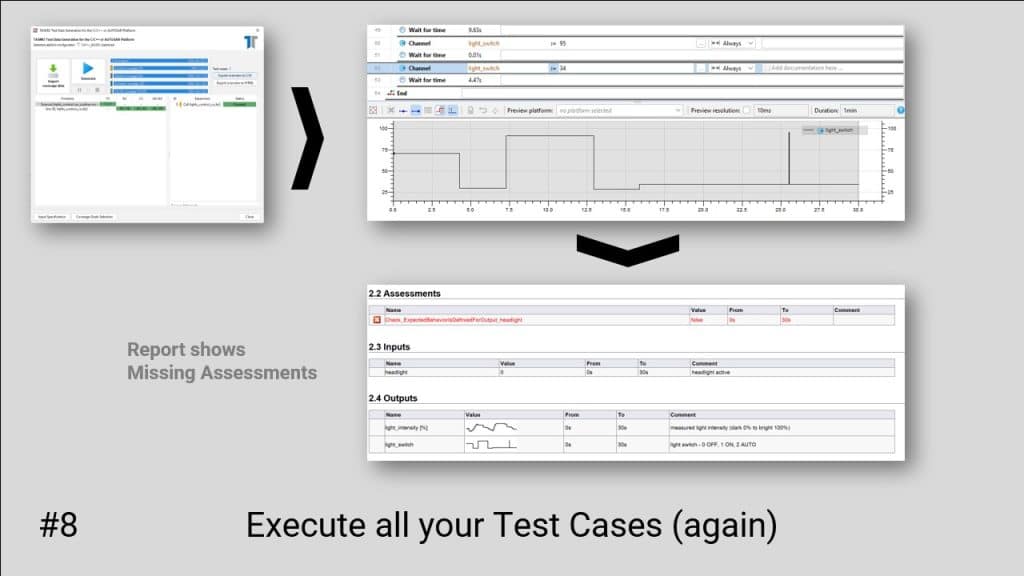

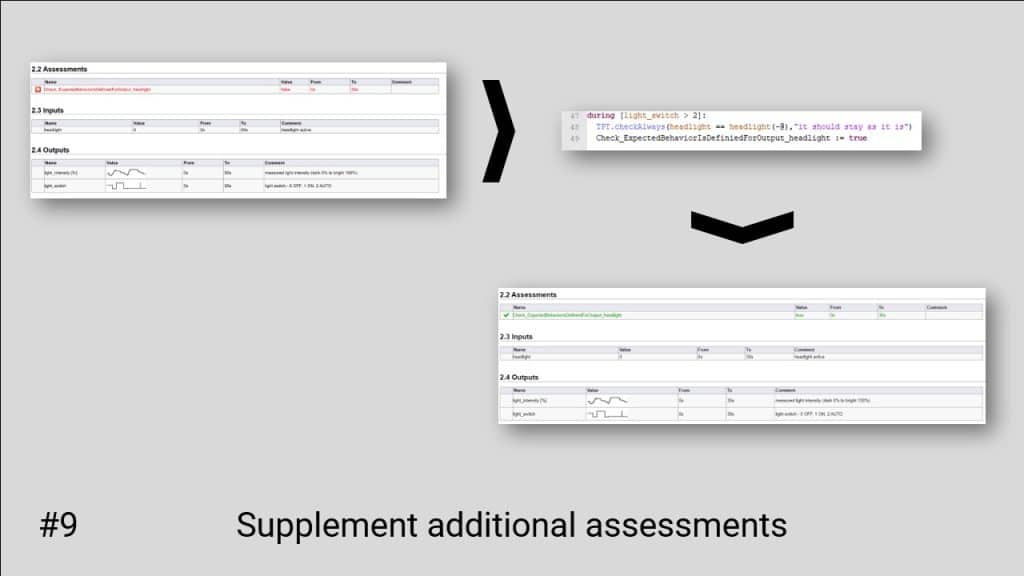

The variable is evaluated automatically to generate the report. If there are time intervals (samples) in which no expected value is present, the variable keeps its default value (false) and the test case fails. In this case, expected values for the test object are missing, and the tester can add them.
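The automatic evaluation can be sketched as a scan over each check variable that collects the sample ranges still at their default value. This is a minimal sketch under assumed inputs (one boolean list per output, evenly spaced samples); the functions and the output names `headlight` and `indicator` are hypothetical.

```python
from typing import Dict, List, Optional, Tuple


def missing_expectation_intervals(check: List[bool]) -> List[Tuple[int, int]]:
    """Return half-open sample ranges [start, end) in which the check variable stayed False."""
    gaps: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, has_expectation in enumerate(check):
        if not has_expectation and start is None:
            start = i  # a gap begins
        elif has_expectation and start is not None:
            gaps.append((start, i))  # the gap ends before sample i
            start = None
    if start is not None:
        gaps.append((start, len(check)))  # the gap runs until the end of the test
    return gaps


def fey_report(check_variables: Dict[str, List[bool]]):
    """Collect gaps per output; the test case fails if any output has a gap."""
    report = {name: missing_expectation_intervals(c) for name, c in check_variables.items()}
    passed = all(not gaps for gaps in report.values())
    return passed, report


passed, report = fey_report({
    "headlight": [True, True, False, False, True],
    "indicator": [True] * 5,
})
print(passed, report)
# False {'headlight': [(2, 4)], 'indicator': []}
```

Reporting gaps as ranges rather than single samples makes it easier to see which test step or situation still needs an expected value.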

3 steps to implement the FEY Approach:

- Step 1 – Create a variable for each output of the test object

- Step 2 – Define each variable to start with the value false

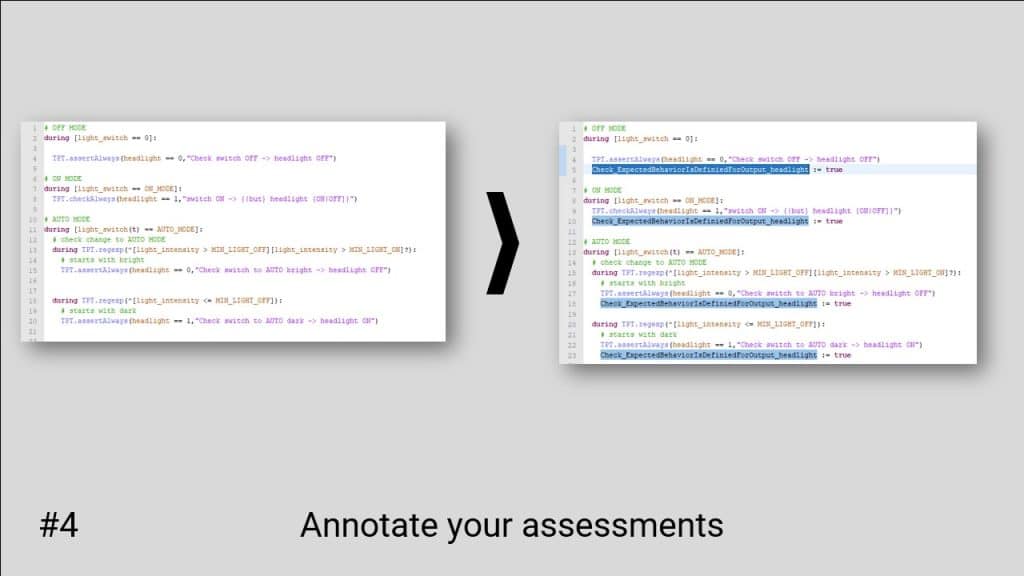

- Step 3 – Set the corresponding variable to true at each assessment of an expected value

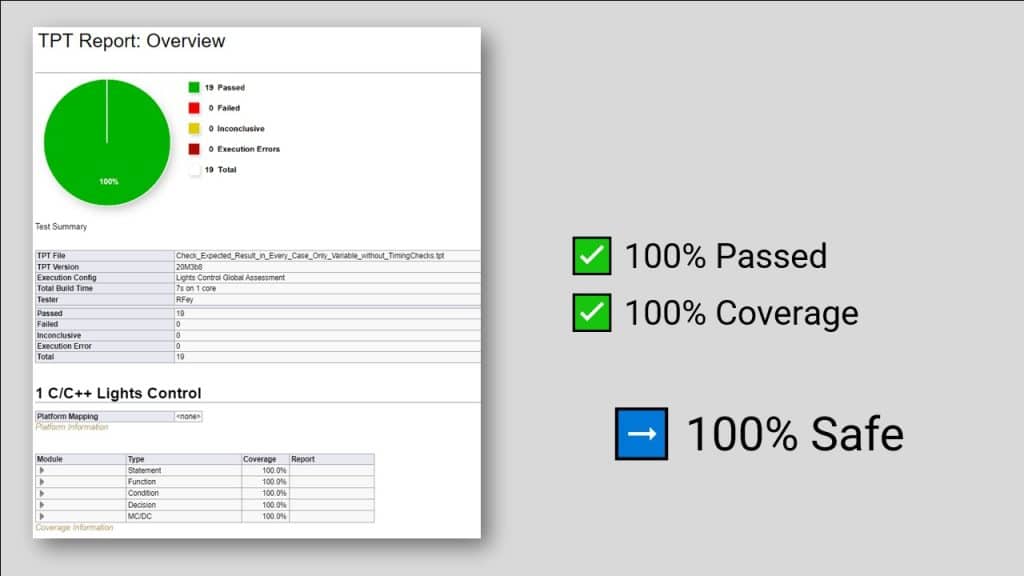
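The three steps can be combined into one helper so that every comparison against an expected value also marks the check variable. The `FeyMonitor` class, the `low_beam` output, and the switch signal below are hypothetical; the sketch only illustrates the pattern and is not how TPT assessments are written.

```python
from typing import Dict, List, Tuple


class FeyMonitor:
    """Hypothetical helper that pairs each assessment with its check variable."""

    def __init__(self, outputs: List[str], num_samples: int):
        # Step 1 and Step 2: one check variable per output, False in every sample.
        self.check: Dict[str, List[bool]] = {name: [False] * num_samples for name in outputs}
        self.failures: List[Tuple[str, int, float, float]] = []

    def assess(self, output: str, sample: int, actual: float, expected: float,
               tol: float = 1e-6) -> None:
        # Step 3: every comparison against an expected value also sets the check variable.
        self.check[output][sample] = True
        if abs(actual - expected) > tol:
            self.failures.append((output, sample, actual, expected))

    def passed(self) -> bool:
        # Pass only if no assessment failed AND every output has an expected value in every sample.
        fully_specified = all(all(flags) for flags in self.check.values())
        return not self.failures and fully_specified


switch = [0, 1, 1, 0]
low_beam = [0, 1, 1, 0]

monitor = FeyMonitor(["low_beam"], len(switch))
for t, s in enumerate(switch):
    if s == 1:  # only the "switch on" case has an expected value so far
        monitor.assess("low_beam", t, low_beam[t], 1)
print(monitor.passed())  # False: samples 0 and 3 have no expected value
```

Setting the flag inside `assess` ties the check variable to an actual comparison; adding an assessment for the "switch off" case (expected value 0) would make `passed()` return True.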

The Result

Since each output's check variable initially has the value false and is set to true only where the output is assessed, tests fail whenever the test object is stimulated in a way whose behavior has not (yet) been specified in the test.

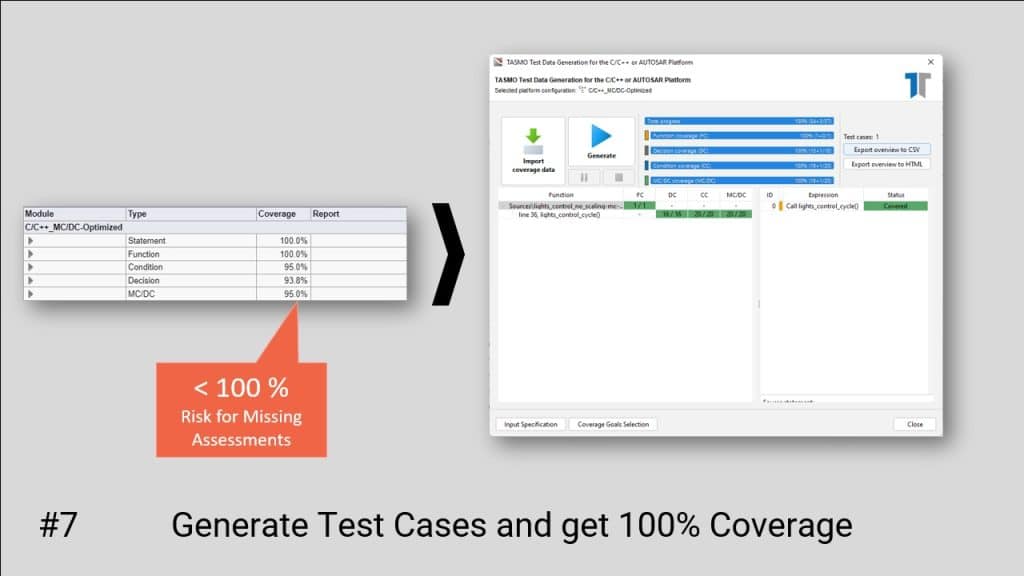

To check as many situations as possible, we recommend using the code coverage metric Modified Condition/Decision Coverage (MC/DC).
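MC/DC requires showing, for each condition in a decision, a pair of test vectors that differ only in that condition and change the decision outcome. The sketch below checks this unique-cause form of MC/DC for a single boolean decision by enumerating its truth table; the `headlights` decision is a hypothetical example, and real coverage tools measure MC/DC on instrumented code rather than like this.

```python
from itertools import product
from typing import Callable, Dict, Iterable, List, Tuple

Vector = Tuple[bool, ...]


def mcdc_pairs(decision: Callable[..., bool], n: int) -> Dict[int, List[Tuple[Vector, Vector]]]:
    """For each condition i, list the vector pairs that differ only in condition i
    and produce different decision outcomes (unique-cause MC/DC)."""
    pairs: Dict[int, List[Tuple[Vector, Vector]]] = {i: [] for i in range(n)}
    for vec in product([False, True], repeat=n):
        for i in range(n):
            if vec[i]:
                continue  # visit each pair once, starting from the False side
            flipped = vec[:i] + (True,) + vec[i + 1:]
            if decision(*vec) != decision(*flipped):
                pairs[i].append((vec, flipped))
    return pairs


def achieves_mcdc(decision: Callable[..., bool], n: int, tests: Iterable[Vector]) -> bool:
    """True if the executed test vectors contain an independence pair for every condition."""
    executed = set(tests)
    pairs = mcdc_pairs(decision, n)
    return all(any(a in executed and b in executed for a, b in pairs[i]) for i in range(n))


def headlights(switch_on: bool, dark: bool, wipers: bool) -> bool:
    # Hypothetical decision from a lights controller.
    return switch_on and (dark or wipers)


T, F = True, False
tests = [(T, T, F), (F, T, F), (T, F, F), (T, F, T)]
print(achieves_mcdc(headlights, 3, tests))      # True with n + 1 = 4 vectors
print(achieves_mcdc(headlights, 3, tests[:3]))  # False: no pair shows the independent effect of wipers
```

For decisions like this one, MC/DC can often be reached with n + 1 vectors, whereas exhaustive testing would need 2^n.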

Real-World Example

To showcase the practicality and effectiveness of the FEY Approach, let’s consider an example in the automotive industry. Imagine a development team working on an advanced driver assistance system (ADAS) for a self-driving car.

By implementing the FEY Approach, the team can create dedicated variables for each output, such as collision detection, lane departure warnings, and adaptive cruise control. With clear expected values defined for each output, the team can comprehensively test the behavior and accuracy of these critical functionalities.

This helps ensure that the ADAS performs reliably, providing enhanced safety for passengers and other road users. Such examples highlight the tangible benefits and real-world application of the FEY Approach in industries where software behavior is of utmost importance.

This methodical approach ensures that

- all situations / scenarios are considered in the test

- for each situation and each outcome there is an expected value in the test

- in case of changes in the test object, the validity of all tests is ensured

Important: In the current implementation, there is no direct coupling between the outputs and the check variables. The implementation must therefore be reviewed for incorrect use.

What do you need for implementation?

All you need is a test automation tool with the following capabilities:

- Measurement of code coverage (at least decision coverage, ideally MC/DC)

- Definition of expected values independent of the test data

- Discrete-time assessments (at least one assessment per sample)

- Holistic assessments per test run

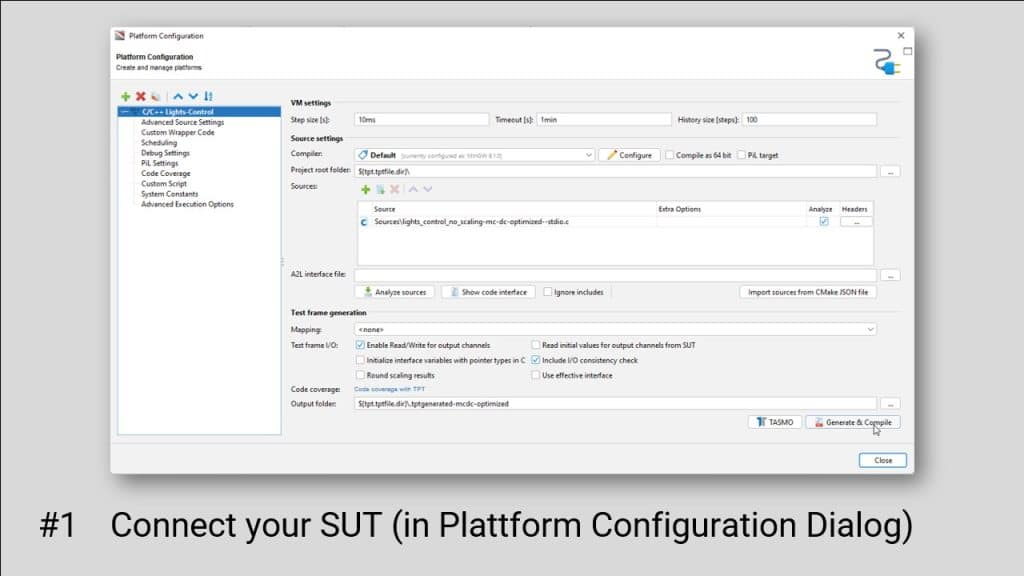

Step-by-Step Implementation of FEY Approach (using TPT)

Reference implementation using the example of Lights Control

- Connect your system under test

- Create assessments that evaluate the behavior

- Create test data (ideally based on requirements)

- Implement the monitoring layer

- Record the interfaces of the test object

- Create check variables for each output

- Extend the assessments with the check variables

- Run the tests and check coverage (decision coverage or MC/DC) -> With our module TASMO, you can automatically generate test data that stimulates all paths and conditions through the code.

- Add test data to achieve 100% code coverage

- Create additional assessments if the check variables show that no expected values are defined for some test data.
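The last steps of the workflow (run tests, check coverage, add test data, add assessments) form a loop. The sketch below walks through that loop for a hypothetical two-input Lights Control function; it is not the TPT reference implementation, and decision coverage is recorded by hand-written instrumentation rather than by a coverage tool or TASMO. The names `lights_control`, `run_suite`, `spec_v1`, and `spec_v2` are illustrative.

```python
from typing import Callable, List, Optional, Set, Tuple

Decision = Tuple[str, bool]
ALL_OUTCOMES: Set[Decision] = {
    ("manual_switch", True), ("manual_switch", False),
    ("auto_dark", True), ("auto_dark", False),
}


def lights_control(switch_on: bool, dark: bool, covered: Set[Decision]) -> bool:
    """Hypothetical Lights Control SUT, instrumented by hand to record decision outcomes."""
    covered.add(("manual_switch", switch_on))
    if switch_on:
        return True
    covered.add(("auto_dark", dark))
    return dark


def run_suite(test_data: List[Tuple[bool, bool]],
              expected_fn: Callable[[bool, bool], Optional[bool]]):
    """Run all samples; return decision coverage, failed samples, and FEY gaps."""
    covered: Set[Decision] = set()
    failures: List[int] = []
    gaps: List[int] = []
    for sample, (switch_on, dark) in enumerate(test_data):
        actual = lights_control(switch_on, dark, covered)
        expected = expected_fn(switch_on, dark)  # None: no expected value yet
        if expected is None:
            gaps.append(sample)  # the check variable stays False
        elif actual != expected:
            failures.append(sample)
    coverage = len(covered) / len(ALL_OUTCOMES)
    return coverage, failures, gaps


def spec_v1(switch_on: bool, dark: bool) -> Optional[bool]:
    # Only the manual-switch requirement has an assessment so far.
    return True if switch_on else None


def spec_v2(switch_on: bool, dark: bool) -> Optional[bool]:
    # Assessment extended to the automatic (darkness) mode.
    return True if switch_on else dark


tests = [(True, False), (False, True)]
print(run_suite(tests, spec_v1))  # (0.75, [], [1]): decision coverage below 100%
tests.append((False, False))      # add test data for full decision coverage
print(run_suite(tests, spec_v1))  # (1.0, [], [1, 2]): FEY reveals missing expected values
print(run_suite(tests, spec_v2))  # (1.0, [], [])
```

Full coverage alone does not end the loop: only once the FEY gaps are empty does every stimulated situation also have an expected value.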

Strengths and Weaknesses of the FEY Approach

Strengths of the FEY Approach

- Ensuring the validity of tests (for each situation there is a clear expectation for the test item)

- Increased safety through detection of specification gaps in combination with coverage measurements, e.g. for driver assistance functions with a high number of variants

- Very simple and understandable implementation -> easy verification in a review

- Compatible with, and a good complement to, methods that ensure traceability between test cases and requirements

Weaknesses of the FEY Approach

- Significance is diminished if the implementation is misused (mitigation: review of the implementation).

- Contradictory requirements are not detected, e.g. multiple expected values for the same output in the same situation (covered by the general testing approach: different expected values for an identical test vector lead to at least one failed assessment).

- Parameterizations are not considered when the behavior of the code is influenced by parameters (mitigation: multi-parameter executions).
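The mitigation for contradictory requirements relies on determinism: a test object produces one output for a given test vector, so two different expected values for that vector cannot both pass. The sketch below illustrates this with a hypothetical `low_beam_on` function and additionally groups expectations by input vector to locate the conflict; both helpers are illustrative, not part of TPT.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Inputs = Tuple[bool, ...]


def failed_assessments(sut: Callable[..., bool],
                       expectations: List[Tuple[Inputs, bool]]) -> List[int]:
    """Indices of expectations that the (deterministic) SUT output does not match."""
    return [i for i, (inputs, expected) in enumerate(expectations) if sut(*inputs) != expected]


def contradictory_inputs(expectations: List[Tuple[Inputs, bool]]) -> List[Inputs]:
    """Input vectors that carry more than one distinct expected value."""
    values: Dict[Inputs, set] = defaultdict(set)
    for inputs, expected in expectations:
        values[inputs].add(expected)
    return [inputs for inputs, vals in values.items() if len(vals) > 1]


def low_beam_on(switch_on: bool, dark: bool) -> bool:
    # Hypothetical SUT behaviour.
    return switch_on or dark


expectations = [
    ((True, False), True),   # derived from one requirement
    ((True, False), False),  # derived from another requirement: contradicts the first
    ((False, True), True),
]
print(failed_assessments(low_beam_on, expectations))  # [1]
print(contradictory_inputs(expectations))             # [(True, False)]
```

Whatever the SUT returns for `(True, False)`, one of the two conflicting assessments fails, which is why the general testing approach exposes the contradiction even though the check variables do not.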

Summary

In this article, we explored the challenges associated with defining expected values in software testing and introduced a solution called Full-Expectation-Yet (FEY) Approach. The core of testing lies in evaluating the outputs and calculations of the software, and the presence of expected values is crucial in determining the success or failure of test cases.

The FEY Approach addresses the shortcomings of traditional testing methods by adding a monitoring layer. It involves creating a dedicated check variable for each output of the system under test, initialized with the default value “false” and set to “true” only where an expected value is assessed. These variables are evaluated automatically, and if an expected value is missing at any point, the test case fails. This approach helps ensure that all situations and outcomes are covered in the tests, providing a systematic method to monitor and verify the expected outputs.

The FEY Approach is particularly suitable for industries with safety-critical developments, development teams working on complex software projects, test managers and engineers responsible for ensuring quality, and quality assurance teams seeking to enhance their testing processes. By focusing on outputs and behavior validation, this approach improves the overall test coverage, effectiveness, and reliability.

While the FEY Approach offers several strengths, such as ensuring test validity and detecting specification gaps, it also has weaknesses. Misuse of the implementation, failure to detect contradictory requirements, and limited consideration of parameterizations are some of the challenges that need to be addressed.

By implementing the FEY Approach, software testing can be revolutionized, leading to more comprehensive and effective testing practices that contribute to improved software quality and reliability.