Implementation Case 1

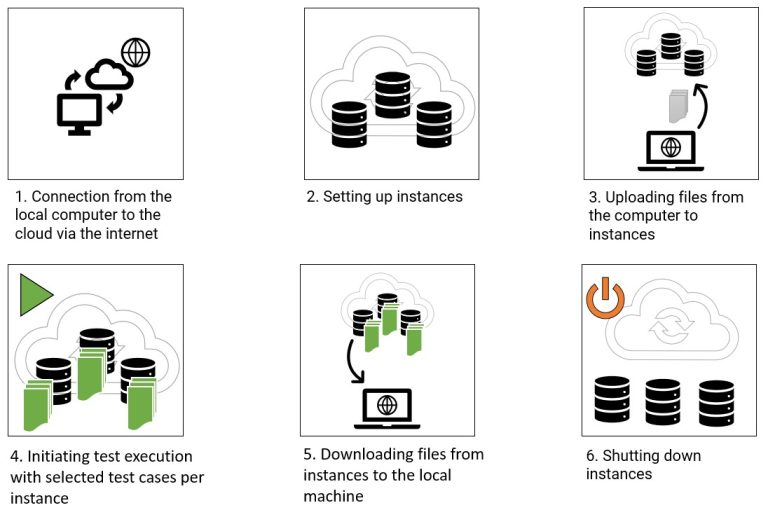

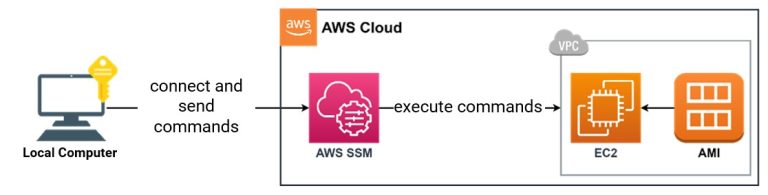

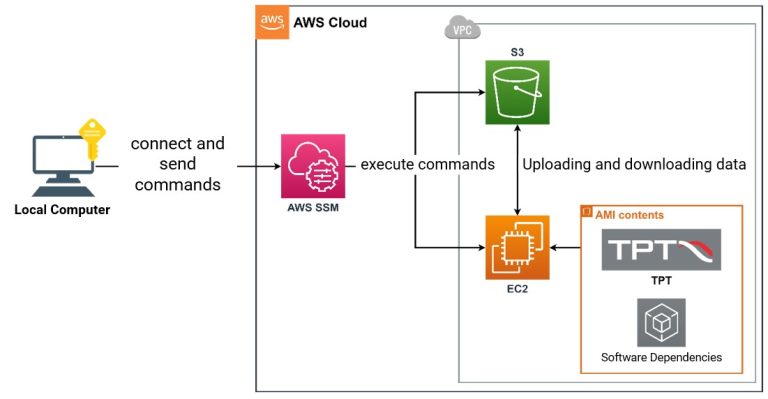

We are investigating how far test execution time can be reduced by distributing the tests across multiple parallel computing units. In this scenario, the user initiates the test run.
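The idea of spreading a test run over several computing units can be illustrated with a minimal Python sketch. It is not TPT's or AGSOTEC's implementation: `run_test_case`, `run_in_parallel`, and `ideal_makespan` are hypothetical names, local threads stand in for the computing units, and every test case is simply reported as passed.

```python
from concurrent.futures import ThreadPoolExecutor


def run_test_case(name: str) -> tuple[str, str]:
    """Hypothetical stand-in for executing one test case on a computing unit."""
    # Assumption: a real setup would dispatch the case to a remote worker here.
    return name, "passed"


def run_in_parallel(test_cases: list[str], workers: int) -> dict[str, str]:
    """Distribute test cases across `workers` parallel units and collect verdicts."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, one (name, verdict) pair per case.
        return dict(pool.map(run_test_case, test_cases))


def ideal_makespan(durations: list[float], workers: int) -> float:
    """Lower bound on wall-clock time for the given per-test durations.

    No schedule can finish faster than the longest single test, nor faster
    than the total work divided evenly across all workers.
    """
    if not durations:
        return 0.0
    return max(max(durations), sum(durations) / workers)
```

The lower bound shows why adding units eventually stops helping: once the longest single test dominates, further parallelism cannot shorten the run.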